Introduction

Apache Kafka is one of the most popular event streaming platforms in the modern software industry. It is available both as open source and through commercial offerings, which makes it a good choice for large-scale message streaming and real-time data processing applications. Kafka is built on the Pub/Sub (Publisher/Subscriber) model, in which producers publish messages to topics and consumers subscribe to those topics to receive them.

In this article, we’ll walk through installing and configuring Kafka, creating topics, setting up a producer and a consumer, and integrating Kafka with Spring Boot.

1. Download Apache Kafka

- First, visit the official Apache Kafka website and click the "DOWNLOAD KAFKA" button in the top navigation bar.

- You'll be redirected to the Apache Kafka download page. There, select the latest stable version of Kafka and download the binary release (not the source release).

2. Install and Configure Kafka

- Extract (unzip) the Kafka archive you downloaded.

- Move the extracted folder to the C: drive and rename it to kafka (for example: C:\kafka).

Important: Avoid deeply nesting the Kafka directory. Keeping the path short helps prevent errors such as “The input line is too long.” when starting Kafka.

Before starting Kafka, a few configuration changes are required in the following property files.

- Configure zookeeper.properties

  - Navigate to C:\kafka\config and open the zookeeper.properties file.

  - Find the dataDir property and update it to the following: dataDir=C:/tmp/zookeeper

- Configure server.properties

  - Similarly, open the server.properties file in the C:\kafka\config folder.

  - Locate the log.dirs property and change it to: log.dirs=C:/tmp/kafka-logs
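The paths above use forward slashes even though this is Windows. One likely reason is that these files follow the Java properties format, where a backslash is an escape character. The sketch below is only an illustration, not part of Kafka: the class and method names are hypothetical, and it uses the standard java.util.Properties parser to show what happens to each style of path.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Illustrative helper (hypothetical, not part of Kafka): shows how
// java.util.Properties reads path values written with / versus \.
class PropertiesPathDemo {

    // Parses .properties text and returns the value stored under the given key.
    static String read(String propertiesText, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // Forward slashes survive parsing unchanged.
        System.out.println(read("log.dirs=C:/tmp/kafka-logs", "log.dirs"));

        // "\\t" in this Java literal is the two characters \t in the file.
        // The parser turns \t into a tab and drops the backslash before 'k',
        // so the resulting path is no longer the one that was intended.
        String parsed = read("log.dirs=C:\\tmp\\kafka-logs", "log.dirs");
        System.out.println("contains a tab character: " + parsed.contains("\t"));
    }
}
```

If backslashes are preferred, each one would need to be doubled in the file (for example C:\\tmp\\kafka-logs); forward slashes avoid the issue entirely.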

3. Start the Kafka Server

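Once Kafka is configured, it’s time to start the services. Note that the steps below use ZooKeeper, so they apply to Kafka releases that still include it; ZooKeeper mode was removed in Kafka 4.0. Please visit the Apache Kafka QuickStart for more information.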

Step 1: Start the ZooKeeper Service:

Open a command prompt in the C:\kafka directory and run the following command to start ZooKeeper.

Command: .\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties

Step 2: Start the Kafka Broker Service:

In a separate terminal window, still within the C:\kafka directory, execute the following command to start the Kafka broker:

Command: .\bin\windows\kafka-server-start.bat .\config\server.properties

Once both services have started successfully, the Kafka broker will be listening on port 9092, its default port.

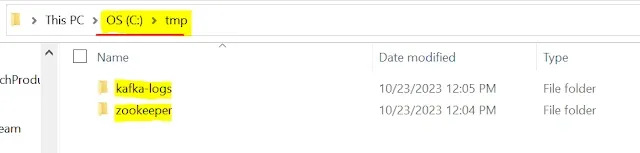

After running the commands above, you should see that a new tmp folder has been created on the C: drive, as configured earlier. Inside it, two new folders (zookeeper and kafka-logs) hold the ZooKeeper data and the Kafka logs.
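To confirm that the broker is actually accepting connections on port 9092, a plain TCP check is often enough. The following is a minimal sketch using only the Java standard library; the class name, method name, and the one-second timeout are assumptions made for illustration. It only verifies that something is listening on the port, not that it is a healthy Kafka broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper for a quick connectivity check.
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host not resolvable.
            return false;
        }
    }

    public static void main(String[] args) {
        // 9092 is the broker port used in this article.
        System.out.println("Broker reachable on 9092: "
                + isListening("localhost", 9092, 1000));
    }
}
```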

4. Create a Kafka Topic

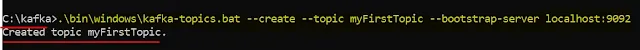

Step 1: Create a Topic:

Open a new terminal window in the C:\kafka directory and run the following command to create a new Kafka topic:

Command: .\bin\windows\kafka-topics.bat --create --topic myFirstTopic --bootstrap-server localhost:9092

Step 2: Create a Topic with Custom Partitions:

For more control, you can specify the number of partitions and the replication factor. With a single local broker, the replication factor cannot be greater than 1:

Command: .\bin\windows\kafka-topics.bat --create --topic partitioned-kafka-topic --bootstrap-server localhost:9092 --partitions 3 --replication-factor 1
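To get a feel for what the three partitions do, it helps to see how a message key can be mapped to a partition. The sketch below is a deliberately simplified stand-in: it uses String.hashCode(), whereas Kafka's Java client hashes the serialized key with murmur2 in its default partitioner, so the actual partition numbers will differ. The class and method names are hypothetical. The point it illustrates still holds: the same key always lands in the same partition.

```java
// Simplified illustration of key-based partitioning; NOT Kafka's real algorithm.
class PartitionSketch {

    // Maps a key to a partition index in the range [0, numPartitions).
    // floorMod keeps the result non-negative even for negative hash codes.
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 3; // matches --partitions 3 in the command above
        String[] keys = {"order-1", "order-2", "order-1", "order-3"};
        for (String key : keys) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

Because messages with the same key go to the same partition, their relative order is preserved there, while different keys can be processed in parallel across partitions.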

5. Create Kafka Producers and Consumers

- Kafka uses the Pub/Sub (Publisher/Subscriber) pattern to handle events and message streaming.

- To add messages to a Kafka topic, we need a Producer (Kafka's term for a publisher), which produces the messages, and a Consumer (Kafka's term for a subscriber), which consumes them by listening to that topic.
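The Pub/Sub idea described above can be sketched in a few lines of plain Java. This is a toy, in-memory illustration with hypothetical names, not how Kafka is implemented: real Kafka consumers pull records from the broker rather than being called back, and messages are stored durably. It only shows the decoupling between the side that publishes and the side that subscribes.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Toy in-memory publish/subscribe hub; all names here are hypothetical.
class TinyPubSub {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    // Registers a handler that is called for every message published to the topic.
    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    // Delivers the message to every handler subscribed to the topic.
    void publish(String topic, String message) {
        List<Consumer<String>> handlers =
                subscribers.getOrDefault(topic, Collections.emptyList());
        for (Consumer<String> handler : handlers) {
            handler.accept(message);
        }
    }

    public static void main(String[] args) {
        TinyPubSub hub = new TinyPubSub();
        hub.subscribe("myFirstTopic", msg -> System.out.println("consumer A got: " + msg));
        hub.subscribe("myFirstTopic", msg -> System.out.println("consumer B got: " + msg));
        // The publisher knows only the topic name, not who is listening.
        hub.publish("myFirstTopic", "hello");
    }
}
```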

5.1 Create a Kafka Producer

To produce messages to the Kafka topic, run the following command:

Command: .\bin\windows\kafka-console-producer.bat --topic myFirstTopic --bootstrap-server localhost:9092

Now you can type messages; each line you enter is sent as a separate message to the myFirstTopic Kafka topic.

5.2 Create a Kafka Consumer

To listen for messages produced to the Kafka topic, open another terminal and run:

Command: .\bin\windows\kafka-console-consumer.bat --topic myFirstTopic --from-beginning --bootstrap-server localhost:9092

With both the Producer and the Consumer running, try sending a few messages from the Producer terminal. They should appear in the Consumer terminal almost immediately, which demonstrates real-time streaming through the Kafka topic.
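The --from-beginning flag used in the consumer command is easier to understand if a topic partition is pictured as an append-only log in which every record has an offset. The sketch below is a toy model with hypothetical names, not Kafka code: a reader that starts from the beginning sees everything already in the log, while one that starts at the end only sees records appended afterwards, which is how the console consumer behaves when the flag is omitted.

```java
import java.util.ArrayList;
import java.util.List;

// Toy append-only log illustrating offsets; names are hypothetical.
class ToyTopicLog {
    private final List<String> records = new ArrayList<>();

    // Appends a record and returns its offset (its position in the log).
    long append(String value) {
        records.add(value);
        return records.size() - 1;
    }

    // Offset a new reader starts from: 0 when reading from the beginning,
    // otherwise the current end of the log.
    long startOffset(boolean fromBeginning) {
        return fromBeginning ? 0 : records.size();
    }

    // Returns a copy of every record at or after the given offset.
    List<String> readFrom(long offset) {
        return new ArrayList<>(records.subList((int) offset, records.size()));
    }

    public static void main(String[] args) {
        ToyTopicLog log = new ToyTopicLog();
        log.append("first");
        log.append("second");

        long early = log.startOffset(true);   // like --from-beginning
        long late = log.startOffset(false);   // like omitting the flag

        log.append("third");

        System.out.println("from beginning: " + log.readFrom(early));
        System.out.println("from end:       " + log.readFrom(late));
    }
}
```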

6. Monitor Kafka Topics and Partitions

To better visualize and monitor your Kafka topics and partitions, you can use a Kafka management tool. This allows you to manage topics, consumers, and brokers through an intuitive graphical interface.

Download Kafka Tool:

Visit the following link to download Kafka Tool: Kafka Tool Download

This GUI tool will help you interact with your Kafka instance and manage your topics, partitions, and messages with ease.

7. Kafka Integration with a Sample Spring Boot Project

Creating a Kafka Producer and Consumer using Spring Boot

For a complete example of Kafka integration in a Spring Boot project, you can refer to the following GitHub repositories:

- Kafka Producer Example: Spring Boot Kafka Producer

- Kafka Consumer Example: Spring Boot Kafka Consumer

These repositories show the configuration needed for both Kafka producers and consumers within a Spring Boot application.
